by Derek Bruff, visiting associate director

Last Friday, CETL co-sponsored an online workshop titled “Generative AI on the Syllabus” with our parent organization, the Academic Innovations Group (AIG). Bob Cummings, executive director of AIG, and I spent an hour with 170 faculty, staff, and graduate students discussing options for banning, embracing, and exploring generative AI tools like ChatGPT, Bing Chat, and Google Bard in our fall courses.

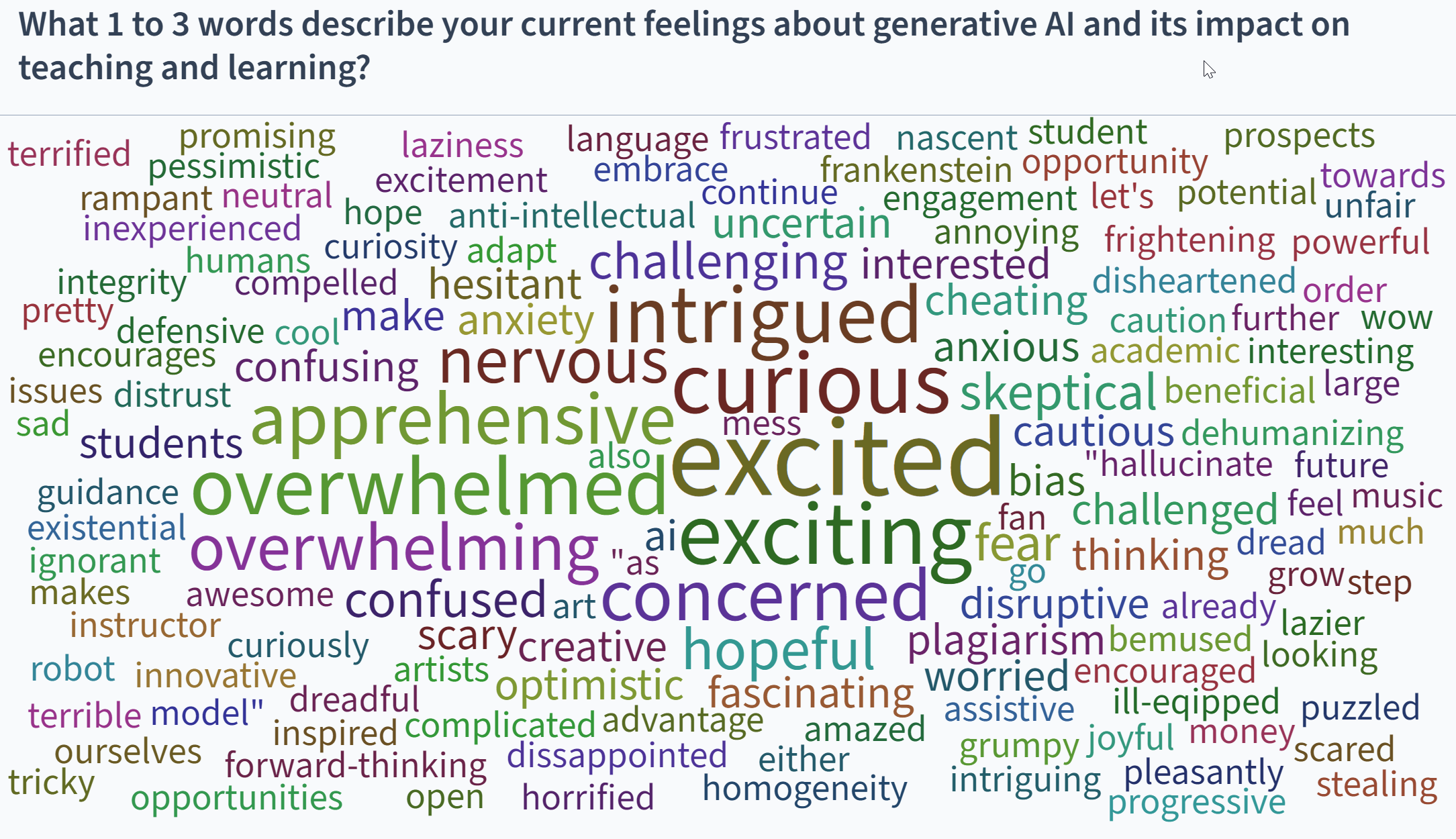

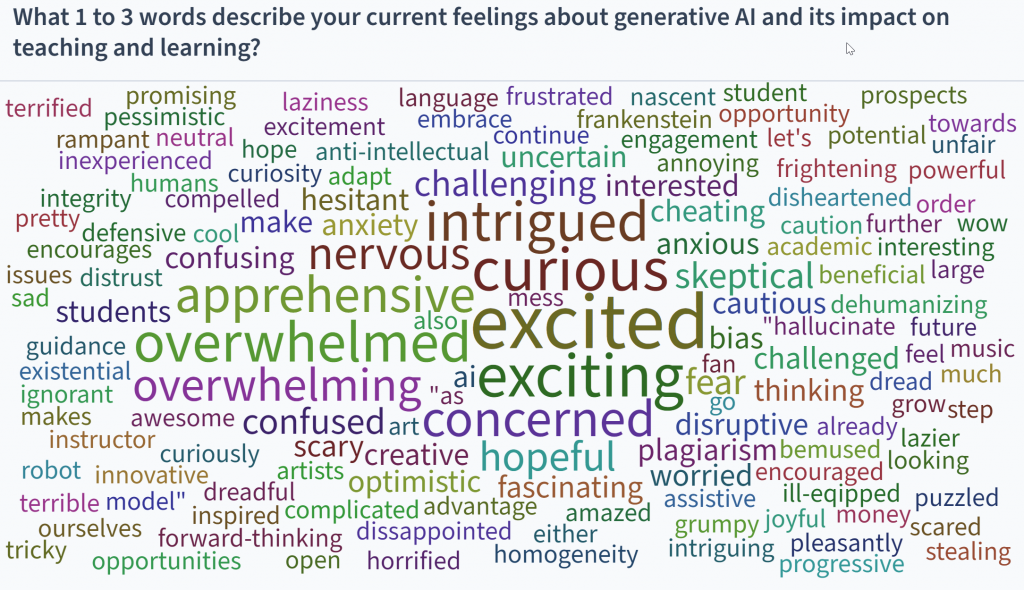

After opening the session with a brief overview of the current landscape of generative AI tools, I asked participants to share a few words describing how they were feeling about generative AI and its impact on teaching and learning. The resulting word cloud (seen below) is full of words like excited, curious, and intrigued, but also apprehensive, concerned, and overwhelmed. It was clear to me that the instructors present on Friday hadn’t completely figured out their approach to generative AI for the fall.

Bob and I then shared the CETL Syllabus Template, a document that offers suggested syllabus language, information about UM policies, and links to course design resources. My CETL colleague Emily Donahoe led a small team of us this summer in developing a new section of that template focused on generative AI. If you’re still working on your AI policy for the fall, I highly recommend opening that document and scrolling to page 9 for some thoughtful options to consider. (Please note: We have released the AI section of the Syllabus Template under a Creative Commons license so that those outside of the University of Mississippi can use and adapt it as they like.)

For example, if you’re leaning toward prohibiting the use of AI text generators in your course, you might use the suggested syllabus language under the heading “Use of Generative AI Not Permitted”:

> Generative AI refers to artificial intelligence technologies, like those used for ChatGPT or Midjourney, that can draw on a large corpus of training data to create new written, visual, or audio content. In this course, we’ll be developing skills that are important to practice on your own. Because use of generative AI may inhibit the development of those skills, I ask that you refrain from employing AI tools in this course. Using such tools for any purposes, or attempting to pass off AI-generated work as your own, will violate our academic integrity policy. I treat potential academic integrity violations by […]
>
> If you’re unsure about whether or not a specific tool makes use of AI or is permitted for use on assignments in this course, please contact me.

You’ll need to fill in those ellipses with your own words, but this is a good start on a conversation with students about use of generative AI in their learning process in your course.

If you’re more open to student use of generative AI tools in your course, then read the section titled “Use of Generative AI Permitted (with or without limitations).” That section offers language to talk about the ways AI tools can support or hinder learning, appropriate uses of generative AI tools in your course, and options for disclosing one’s use of an AI tool on an assignment (e.g., the APA’s newly issued recommendations). It also lists a number of AI tools as a reminder that we’re not just talking about ChatGPT here.

The recommendation section of the syllabus template expands on that idea:

> As you craft your policy, please keep in mind that students may encounter generative AI in a variety of programs: chatbots like ChatGPT; image generators like DALL-E or Midjourney; writing and research assistants like Wordtune, Elicit, or Grammarly; and eventually word processing applications like Google Docs or Microsoft Word. Consider incorporating flexibility into your guidelines to account for this range of tools and for rapid, ongoing developments in AI technologies.

There’s also a caution about the use of AI detection tools:

> Please be aware, too, that AI detection tools are unreliable, and use of AI detection software, which is not FERPA-protected, may violate students’ privacy or intellectual property rights. Because student use of generative AI may be unprovable, we recommend that instructors take a proactive rather than reactive approach to potential academic dishonesty.

After discussing the CETL Syllabus Template and its new language about AI, I shared a few ideas for revising assignments this fall in light of the presence of generative AI tools. I walked through an “assignment makeover” for an old essay assignment, a makeover that I detailed on my blog Agile Learning last month. In that post, I suggest six questions to consider as you rethink your assignments for the fall:

- Why does this assignment make sense for this course?

- What are specific learning objectives for this assignment?

- How might students use AI tools while working on this assignment?

- How might AI undercut the goals of this assignment? How could you mitigate this?

- How might AI enhance the assignment? Where would students need help figuring that out?

- Focus on the process. How could you make the assignment more meaningful for students or support them more in the work?

Two big questions emerged from the Q&A portion of the workshop. One, is there any practical way to determine if a piece of student work was ghostwritten by ChatGPT? Answer: No, not really. All the AI detectors are unreliable to one degree or another. Two, how might we teach students about the limitations of AI tools, like the fact that they output things that are not true? Answer: One approach is to have students work with these tools and critique their outputs, like these divinity school faculty did in the spring. (I think that example is my favorite pedagogical use of ChatGPT that I’ve encountered thus far.)

What’s next for this topic? Bob and I shared a few possibilities for UM instructors:

- Auburn University has opened its online, asynchronous “Teaching with AI” course to faculty across the SEC, which includes UM faculty. This course is a time commitment (maybe 10 to 15 hours), but it’s full of examples of assignments that have been redesigned for an age of AI. Reach out to Bob Cummings if you’re interested in taking this course.

- Marc Watkins, lecturer in writing and rhetoric and now also an academic innovation fellow at AIG, has also built a course available to UM instructors. It’s called “Introduction to Generative AI,” and it offers a lot for faculty, staff, and students interested in building their AI literacy, something Marc recommended in his recent Washington Post interview. To gain access, just contact me.

- This past summer, the UM Department of Writing & Rhetoric offered an AI summer institute for teachers of writing. At some point this fall, they’re planning to offer the institute again for UM faculty, but with a broader scope. Keep an eye out for announcements about this second offering.

- Here at CETL, our teaching consultants are available to talk with UM instructors about course and assignment design that integrates or mitigates the use of generative AI. You’re welcome to contact me or any of our staff with questions.

- Finally, we’re planning two more events in this CETL/AIG series on teaching and AI, both on Zoom later this semester. “Teaching in the Age of AI: What’s Working, What’s Not” is scheduled for September 18th, and “Generative AI in the Classroom: The Student Perspective” is scheduled for October 10th. See our Events page for details and registration.